Downstream pipelines

A downstream pipeline is any GitLab CI/CD pipeline triggered by another pipeline. Downstream pipelines run independently of, and concurrently with, the upstream pipeline that triggered them.

- A parent-child pipeline is a downstream pipeline triggered in the same project as the first pipeline.

- A multi-project pipeline is a downstream pipeline triggered in a different project than the first pipeline.

You can sometimes use parent-child pipelines and multi-project pipelines for similar purposes, but there are key differences.

Parent-child pipelines

A parent pipeline is a pipeline that triggers a downstream pipeline in the same project. The downstream pipeline is called a child pipeline.

Child pipelines:

- Run under the same project, ref, and commit SHA as the parent pipeline.

- Do not directly affect the overall status of the ref the pipeline runs against. For example, if a pipeline fails for the main branch, it’s common to say that “main is broken”. The status of child pipelines only affects the status of the ref if the child pipeline is triggered with strategy:depend.

- Are automatically canceled if the pipeline is configured with interruptible when a new pipeline is created for the same ref.

- Are not displayed in the project’s pipeline list. You can only view child pipelines on their parent pipeline’s details page.

Nested child pipelines

- Introduced in GitLab 13.4.

- Feature flag removed in GitLab 13.5.

Parent and child pipelines have a maximum depth of two levels of child pipelines.

A parent pipeline can trigger many child pipelines, and these child pipelines can trigger their own child pipelines. You cannot trigger another level of child pipelines.
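The two allowed levels can be sketched as follows. This is an illustration only: the file names child.yml and grandchild.yml and all job names are hypothetical, and the three sections belong in three separate files, shown together here for brevity.

```yaml
# .gitlab-ci.yml (parent pipeline)
trigger_child:
  trigger:
    include:
      - local: child.yml

# child.yml (first-level child pipeline)
trigger_grandchild:
  trigger:
    include:
      - local: grandchild.yml

# grandchild.yml (second-level child pipeline).
# A trigger job for another child pipeline is not allowed at this level.
leaf_job:
  script: echo "Deepest allowed level"
```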

For an overview, see Nested Dynamic Pipelines.

Multi-project pipelines

A pipeline in one project can trigger downstream pipelines in another project, called multi-project pipelines. The user triggering the upstream pipeline must be able to start pipelines in the downstream project; otherwise, the downstream pipeline fails to start.

Multi-project pipelines:

- Are triggered from another project’s pipeline, but the upstream (triggering) pipeline does not have much control over the downstream (triggered) pipeline. However, it can choose the ref of the downstream pipeline, and pass CI/CD variables to it.

- Affect the overall status of the ref of the project they run in, but do not affect the status of the triggering pipeline’s ref, unless they were triggered with strategy:depend.

- Are not automatically canceled in the downstream project when using interruptible if a new pipeline runs for the same ref in the upstream pipeline. They can be automatically canceled if a new pipeline is triggered for the same ref on the downstream project.

- Are visible in the downstream project’s pipeline list.

- Are independent, so there are no nesting limits.

Learn more in the “Cross-project Pipeline Triggering and Visualization” demo at GitLab@learn, in the Continuous Integration section.

If you use a public project to trigger downstream pipelines in a private project, make sure there are no confidentiality problems. The upstream project’s pipelines page always displays:

- The name of the downstream project.

- The status of the pipeline.

Trigger a downstream pipeline from a job in the .gitlab-ci.yml file

Use the trigger keyword in your .gitlab-ci.yml file to create a job that triggers a downstream pipeline. This job is called a trigger job.

For example:

::Tabs

:::TabTitle Parent-child pipeline

    trigger_job:
      trigger:
        include:
          - local: path/to/child-pipeline.yml

:::TabTitle Multi-project pipeline

    trigger_job:
      trigger:
        project: project-group/my-downstream-project

::EndTabs

After the trigger job starts, the initial status of the job is pending while GitLab attempts to create the downstream pipeline. The trigger job shows passed if the downstream pipeline is created successfully; otherwise, it shows failed. Alternatively, you can set the trigger job to show the downstream pipeline’s status instead.

Use rules to control downstream pipeline jobs

Use CI/CD variables or the rules keyword to control job behavior in downstream pipelines.

When you trigger a downstream pipeline with the trigger keyword, the value of the $CI_PIPELINE_SOURCE predefined variable for all jobs is:

- pipeline for multi-project pipelines.

- parent_pipeline for parent-child pipelines.

For example, to control jobs in multi-project pipelines in a project that also runs merge request pipelines:

    job1:
      rules:
        - if: $CI_PIPELINE_SOURCE == "pipeline"
      script: echo "This job runs in multi-project pipelines only"

    job2:
      rules:
        - if: $CI_PIPELINE_SOURCE == "merge_request_event"
      script: echo "This job runs in merge request pipelines only"

    job3:
      rules:
        - if: $CI_PIPELINE_SOURCE == "pipeline"
        - if: $CI_PIPELINE_SOURCE == "merge_request_event"
      script: echo "This job runs in both multi-project and merge request pipelines"
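The same approach should apply to parent-child pipelines by testing for the parent_pipeline value instead. A minimal sketch, assuming a hypothetical job named child_only_job in the child pipeline's configuration file:

```yaml
# Child pipeline configuration file (hypothetical job name)
child_only_job:
  rules:
    # parent_pipeline is the source value for jobs in child pipelines
    - if: $CI_PIPELINE_SOURCE == "parent_pipeline"
  script: echo "This job runs only when a parent pipeline triggers this configuration"
```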

Use a child pipeline configuration file in a different project

Introduced in GitLab 13.5.

You can use include:project in a trigger job to trigger child pipelines with a configuration file in a different project:

    microservice_a:
      trigger:
        include:
          - project: 'my-group/my-pipeline-library'
            ref: 'main'
            file: '/path/to/child-pipeline.yml'

Combine multiple child pipeline configuration files

You can include up to three configuration files when defining a child pipeline. The child pipeline’s configuration is composed of all configuration files merged together:

    microservice_a:
      trigger:
        include:
          - local: path/to/microservice_a.yml
          - template: Security/SAST.gitlab-ci.yml
          - project: 'my-group/my-pipeline-library'
            ref: 'main'
            file: '/path/to/child-pipeline.yml'

Dynamic child pipelines

You can trigger a child pipeline from a YAML file generated in a job, instead of a static file saved in your project. This technique can be very powerful for generating pipelines that target changed content or for building a matrix of targets and architectures.

The artifact containing the generated YAML file must not be larger than 5 MB.

For an overview, see Create child pipelines using dynamically generated configurations.

For an example project that generates a dynamic child pipeline, see Dynamic Child Pipelines with Jsonnet. This project shows how to use a data templating language to generate your .gitlab-ci.yml at runtime. You can use a similar process for other templating languages like Dhall or ytt.

Trigger a dynamic child pipeline

To trigger a child pipeline from a dynamically generated configuration file:

- Generate the configuration file in a job and save it as an artifact:

      generate-config:
        stage: build
        script: generate-ci-config > generated-config.yml
        artifacts:
          paths:
            - generated-config.yml

- Configure the trigger job to run after the job that generated the configuration file, and set include: artifact to the generated artifact:

      child-pipeline:
        stage: test
        trigger:
          include:
            - artifact: generated-config.yml
              job: generate-config

In this example, GitLab retrieves generated-config.yml and triggers a child pipeline with the CI/CD configuration in that file.
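The generate-ci-config command stands in for whatever tool produces the file. As a rough, self-contained sketch of both steps, the generating job below writes a small configuration with a shell heredoc. The generated job build-target and its script are hypothetical, and a shell-based runner image is assumed:

```yaml
generate-config:
  stage: build
  script:
    # Write a tiny child pipeline configuration to generated-config.yml.
    - |
      cat > generated-config.yml <<EOF
      build-target:
        script:
          - echo "Building in a generated child pipeline"
      EOF
  artifacts:
    paths:
      - generated-config.yml

child-pipeline:
  stage: test
  trigger:
    include:
      # The artifact path and the job that produced it
      - artifact: generated-config.yml
        job: generate-config
```

In practice the heredoc would likely be replaced by a script or templating tool that decides which jobs to emit.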

The artifact path is parsed by GitLab, not the runner, so the path must match the syntax for the OS running GitLab. If GitLab is running on Linux but using a Windows runner for testing, the path separator for the trigger job is /. Other CI/CD configuration for jobs that use the Windows runner, like scripts, use \.

Run child pipelines with merge request pipelines

To trigger a child pipeline as a merge request pipeline:

- Set the trigger job to run on merge requests in the parent pipeline’s configuration file:

      microservice_a:
        trigger:
          include: path/to/microservice_a.yml
        rules:
          - if: $CI_PIPELINE_SOURCE == "merge_request_event"

- Configure the child pipeline jobs to run in merge request pipelines with rules or workflow:rules. For example, with rules in a child pipeline’s configuration file:

      job1:
        script: echo "Child pipeline job 1"
        rules:
          - if: $CI_MERGE_REQUEST_ID

      job2:
        script: echo "Child pipeline job 2"
        rules:
          - if: $CI_MERGE_REQUEST_ID

  In child pipelines, $CI_PIPELINE_SOURCE always has a value of parent_pipeline and cannot be used to identify merge request pipelines. Use $CI_MERGE_REQUEST_ID instead, which is always present in merge request pipelines.
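The workflow:rules alternative mentioned in the second step might look like the following sketch. It applies the condition once to the whole child pipeline instead of repeating it in each job. The job names mirror the rules example and are illustrative only:

```yaml
# Child pipeline configuration file
workflow:
  rules:
    # Create the child pipeline only for merge request pipelines
    - if: $CI_MERGE_REQUEST_ID

job1:
  script: echo "Child pipeline job 1"

job2:
  script: echo "Child pipeline job 2"
```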

Specify a branch for multi-project pipelines

You can specify the branch to use when triggering a multi-project pipeline. GitLab uses the commit on the head of the branch to create the downstream pipeline. For example:

    staging:
      stage: deploy
      trigger:
        project: my/deployment
        branch: stable-11-2

Use:

- The project keyword to specify the full path to the downstream project. In GitLab 15.3 and later, you can use variable expansion.

- The branch keyword to specify the name of a branch or tag in the project specified by project. You can use variable expansion.
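As a minimal sketch of variable expansion in branch, the trigger job could reuse the predefined $CI_COMMIT_REF_NAME variable so that the downstream pipeline runs on the branch with the same name as the upstream ref. This assumes that such a branch exists in the downstream project:

```yaml
staging:
  stage: deploy
  trigger:
    project: my/deployment
    # Assumption: a branch with this name also exists in my/deployment.
    branch: $CI_COMMIT_REF_NAME
```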

Trigger a multi-project pipeline by using the API

You can use the CI/CD job token (CI_JOB_TOKEN) with the pipeline trigger API endpoint to trigger multi-project pipelines from inside a CI/CD job. GitLab sets pipelines triggered with a job token as downstream pipelines of the pipeline that contains the job that made the API call.

For example:

    trigger_pipeline:
      stage: deploy
      script:
        - curl --request POST --form "token=$CI_JOB_TOKEN" --form ref=main "https://gitlab.example.com/api/v4/projects/9/trigger/pipeline"
      rules:
        - if: $CI_COMMIT_TAG
      environment: production
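A variation on this job, offered as a sketch rather than a definitive recipe: it builds the URL from the predefined $CI_API_V4_URL variable instead of a hardcoded host, and passes a variable to the downstream pipeline with the trigger endpoint's variables[...] form parameter. The variable name UPSTREAM_SHA is hypothetical, and project ID 9 is carried over from the example above:

```yaml
trigger_pipeline:
  stage: deploy
  script:
    # --fail makes curl exit non-zero on HTTP errors, so the job fails visibly.
    - >
      curl --fail --request POST
      --form "token=$CI_JOB_TOKEN"
      --form "ref=main"
      --form "variables[UPSTREAM_SHA]=$CI_COMMIT_SHA"
      "$CI_API_V4_URL/projects/9/trigger/pipeline"
  rules:
    - if: $CI_COMMIT_TAG
  environment: production
```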

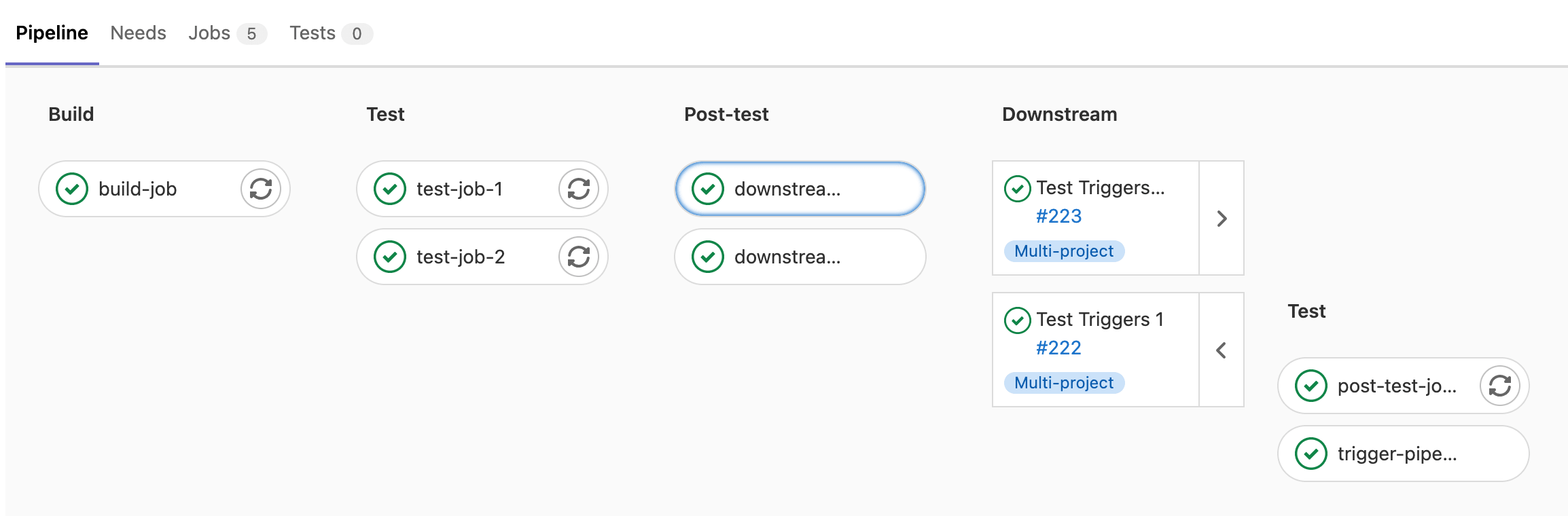

View a downstream pipeline

Hover behavior for pipeline cards introduced in GitLab 13.2.

In the pipeline graph view, downstream pipelines display as a list of cards on the right of the graph. Hover over the pipeline’s card to view which job triggered the downstream pipeline.

Retry a downstream pipeline

- Retry from graph view introduced in GitLab 15.0 with a flag named downstream_retry_action. Disabled by default.

- Retry from graph view generally available and feature flag removed in GitLab 15.1.

To retry a completed downstream pipeline, select Retry:

- From the downstream pipeline’s details page.

- On the pipeline’s card in the pipeline graph view.

Cancel a downstream pipeline

- Retry from graph view introduced in GitLab 15.0 with a flag named downstream_retry_action. Disabled by default.

- Retry from graph view generally available and feature flag removed in GitLab 15.1.

To cancel a downstream pipeline that is still running, select Cancel:

- From the downstream pipeline’s details page.

- On the pipeline’s card in the pipeline graph view.

Mirror the status of a downstream pipeline in the trigger job

You can mirror the status of the downstream pipeline in the trigger job by using strategy: depend:

::Tabs

:::TabTitle Parent-child pipeline

    trigger_job:
      trigger:
        include:
          - local: path/to/child-pipeline.yml
        strategy: depend

:::TabTitle Multi-project pipeline

    trigger_job:
      trigger:
        project: my/project
        strategy: depend

::EndTabs

View multi-project pipelines in pipeline graphs

After you trigger a multi-project pipeline, the downstream pipeline displays to the right of the pipeline graph.

In pipeline mini graphs, the downstream pipeline displays to the right of the mini graph.

Fetch artifacts from an upstream pipeline

Use needs:project to fetch artifacts from an upstream pipeline:

- In the upstream pipeline, save the artifacts in a job with the artifacts keyword, then trigger the downstream pipeline with a trigger job:

      build_artifacts:
        stage: build
        script:
          - echo "This is a test artifact!" >> artifact.txt
        artifacts:
          paths:
            - artifact.txt

      deploy:
        stage: deploy
        trigger: my/downstream_project

- Use needs:project in a job in the downstream pipeline to fetch the artifacts.

      test:
        stage: test
        script:
          - cat artifact.txt
        needs:
          - project: my/upstream_project
            job: build_artifacts
            ref: main
            artifacts: true

  Set:

  - job to the job in the upstream pipeline that created the artifacts.

  - ref to the branch.

  - artifacts to true.

Fetch artifacts from an upstream merge request pipeline

When you use needs:project to pass artifacts to a downstream pipeline, the ref value is usually a branch name, like main or development.

For merge request pipelines, the ref value is in the form of refs/merge-requests/<id>/head, where id is the merge request ID. You can retrieve this ref with the CI_MERGE_REQUEST_REF_PATH CI/CD variable. Do not use a branch name as the ref with merge request pipelines, because the downstream pipeline attempts to fetch artifacts from the latest branch pipeline.

To fetch the artifacts from the upstream merge request pipeline instead of the branch pipeline, pass CI_MERGE_REQUEST_REF_PATH to the downstream pipeline using variable inheritance:

- In a job in the upstream pipeline, save the artifacts using the artifacts keyword.

- In the job that triggers the downstream pipeline, pass the $CI_MERGE_REQUEST_REF_PATH variable:

      build_artifacts:
        stage: build
        script:
          - echo "This is a test artifact!" >> artifact.txt
        artifacts:
          paths:
            - artifact.txt

      upstream_job:
        variables:
          UPSTREAM_REF: $CI_MERGE_REQUEST_REF_PATH
        trigger:
          project: my/downstream_project
          branch: my-branch

- In a job in the downstream pipeline, fetch the artifacts from the upstream pipeline by using needs:project and the passed variable as the ref:

      test:
        stage: test
        script:
          - cat artifact.txt
        needs:
          - project: my/upstream_project
            job: build_artifacts
            ref: $UPSTREAM_REF
            artifacts: true

You can use this method to fetch artifacts from upstream merge request pipelines, but not from merge results pipelines.

Pass CI/CD variables to a downstream pipeline

You can pass CI/CD variables to a downstream pipeline with a few different methods, based on where the variable is created or defined.

Pass YAML-defined CI/CD variables

You can use the variables keyword to pass CI/CD variables to a downstream pipeline. These variables are “trigger variables” for variable precedence.

For example:

::Tabs

:::TabTitle Parent-child pipeline

    variables:
      VERSION: "1.0.0"

    staging:
      variables:
        ENVIRONMENT: staging
      stage: deploy
      trigger:
        include:
          - local: path/to/child-pipeline.yml

:::TabTitle Multi-project pipeline

    variables:
      VERSION: "1.0.0"

    staging:
      variables:
        ENVIRONMENT: staging
      stage: deploy
      trigger: my-group/my-deployment-project

::EndTabs

The ENVIRONMENT variable is available in every job defined in the downstream pipeline.

The VERSION global variable is also available in the downstream pipeline, because all jobs in a pipeline, including trigger jobs, inherit global variables.
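For illustration, a job in the downstream configuration could read both variables like any other CI/CD variable. The job name deploy_job and its script are hypothetical:

```yaml
# Downstream pipeline configuration (for example, path/to/child-pipeline.yml)
deploy_job:
  stage: deploy
  script:
    # VERSION and ENVIRONMENT arrive from the upstream trigger job
    - echo "Deploying version $VERSION to $ENVIRONMENT"
```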

Prevent global variables from being passed

You can stop global CI/CD variables from reaching the downstream pipeline with inherit:variables:false.

For example:

::Tabs

:::TabTitle Parent-child pipeline

    variables:
      GLOBAL_VAR: value

    trigger-job:
      inherit:
        variables: false
      variables:
        JOB_VAR: value
      trigger:
        include:
          - local: path/to/child-pipeline.yml

:::TabTitle Multi-project pipeline

    variables:
      GLOBAL_VAR: value

    trigger-job:
      inherit:
        variables: false
      variables:
        JOB_VAR: value
      trigger: my-group/my-project

::EndTabs

The GLOBAL_VAR variable is not available in the triggered pipeline, but JOB_VAR is available.
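To pass only some global variables, one option is to give inherit:variables a list of names instead of false. The sketch below assumes the keyword accepts such a list. The variable names GLOBAL_VAR_A and GLOBAL_VAR_B are hypothetical:

```yaml
variables:
  GLOBAL_VAR_A: value-a
  GLOBAL_VAR_B: value-b

trigger-job:
  inherit:
    variables:
      - GLOBAL_VAR_A   # only this global variable is inherited and passed on
  variables:
    JOB_VAR: value
  trigger:
    include:
      - local: path/to/child-pipeline.yml
```

Under that assumption, GLOBAL_VAR_A and JOB_VAR would reach the child pipeline, and GLOBAL_VAR_B would not.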

Pass a predefined variable

To pass information about the upstream pipeline using predefined CI/CD variables, use interpolation. Save the predefined variable as a new job variable in the trigger job, which is passed to the downstream pipeline. For example:

::Tabs

:::TabTitle Parent-child pipeline

    trigger-job:
      variables:
        PARENT_BRANCH: $CI_COMMIT_REF_NAME
      trigger:
        include:
          - local: path/to/child-pipeline.yml

:::TabTitle Multi-project pipeline

    trigger-job:
      variables:
        UPSTREAM_BRANCH: $CI_COMMIT_REF_NAME
      trigger: my-group/my-project

::EndTabs

The UPSTREAM_BRANCH variable (PARENT_BRANCH in the parent-child example), which contains the value of the upstream pipeline’s $CI_COMMIT_REF_NAME predefined CI/CD variable, is available in the downstream pipeline.

Do not use this method to pass masked variables to a multi-project pipeline. The CI/CD masking configuration is not passed to the downstream pipeline and the variable could be unmasked in job logs in the downstream project.

You cannot use this method to forward job-level persisted variables to a downstream pipeline, as they are not available in trigger jobs.

Upstream pipelines take precedence over downstream ones. If there are two variables with the same name defined in both upstream and downstream projects, the ones defined in the upstream project take precedence.

Pass dotenv variables created in a job

You can pass variables to a downstream pipeline with dotenv variable inheritance and needs:project.

For example, in a multi-project pipeline:

- Save the variables in a .env file.

- Save the .env file as a dotenv report.

- Trigger the downstream pipeline.

      build_vars:
        stage: build
        script:
          - echo "BUILD_VERSION=hello" >> build.env
        artifacts:
          reports:
            dotenv: build.env

      deploy:
        stage: deploy
        trigger: my/downstream_project

- Set the test job in the downstream pipeline to inherit the variables from the build_vars job in the upstream project with needs. The test job inherits the variables in the dotenv report and it can access BUILD_VERSION in the script:

      test:
        stage: test
        script:
          - echo $BUILD_VERSION
        needs:
          - project: my/upstream_project
            job: build_vars
            ref: master
            artifacts: true

Troubleshooting

Trigger job fails and does not create multi-project pipeline

With multi-project pipelines, the trigger job fails and does not create the downstream pipeline if:

- The downstream project is not found.

- The user that creates the upstream pipeline does not have permission to create pipelines in the downstream project.

- The downstream pipeline targets a protected branch and the user does not have permission to run pipelines against the protected branch. See pipeline security for protected branches for more information.

Ref is ambiguous

You cannot trigger a multi-project pipeline with a tag when a branch exists with the same name. The downstream pipeline is not created, and the trigger job fails with the error: downstream pipeline can not be created, Ref is ambiguous.

Only trigger multi-project pipelines with tag names that do not match branch names.